The Technology Powering Virtual Try-On

In today’s competitive e-commerce landscape, brands are adopting advanced solutions to redefine online shopping.

Virtual Try-On (VTO) harnesses cutting-edge computer vision, real-time 3D rendering, and AI-driven fit algorithms to deliver a hyper-realistic, personalized product interaction.

Far more than a novelty, VTO is now a consumer expectation—letting shoppers engage with items as though they were holding them in person.

By understanding the sophisticated mechanics behind VTO, brands can unlock its full power to drive engagement, boost conversions, and elevate customer satisfaction.

How Virtual Try-On Works

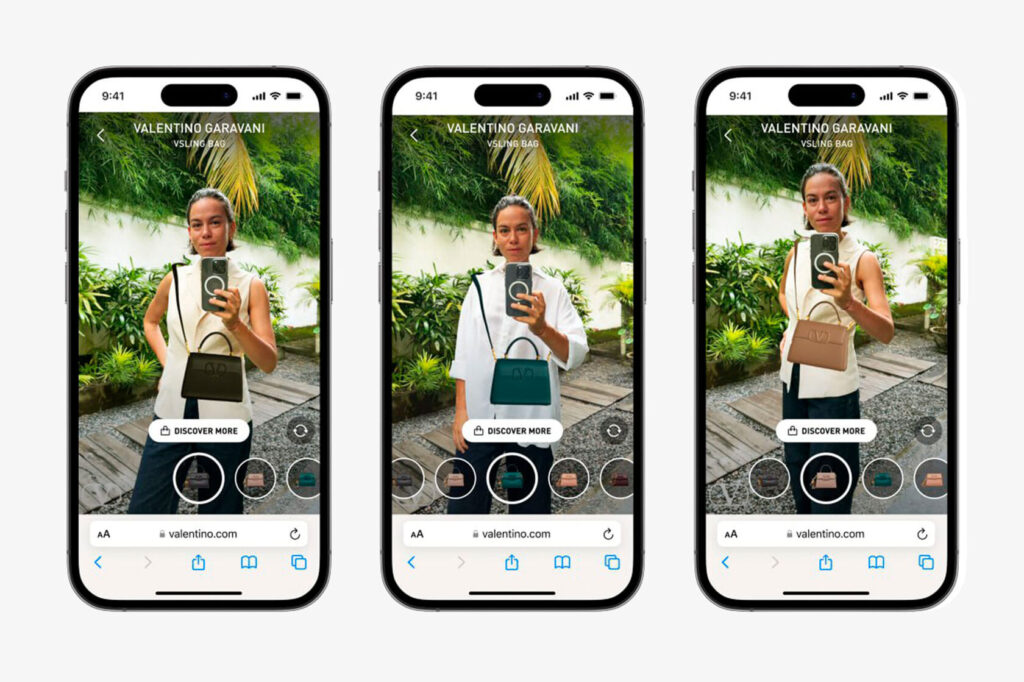

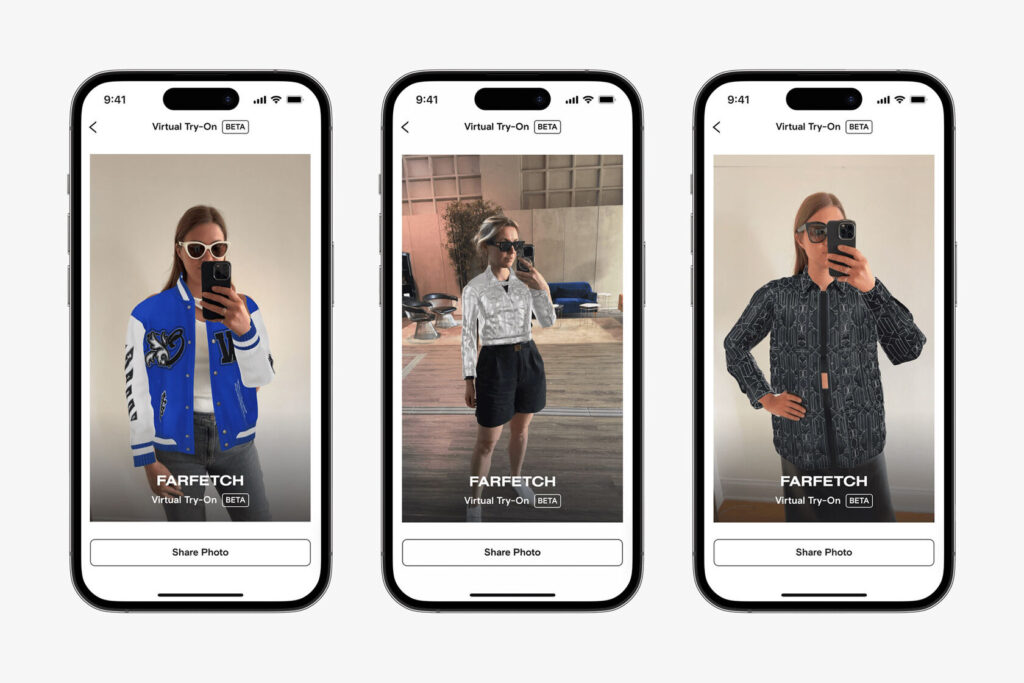

The shift from static 2D product images to interactive 3D visuals has revolutionized e-commerce. Early online stores relied on flat photographs that gave shoppers only a front-facing view of items. As 3D modeling and real-time rendering matured, brands began offering rotatable product views—letting customers inspect finishes, textures, and contours from every angle. Virtual Try-On (VTO) takes this a step further by overlaying 3D assets onto a live camera feed, creating an almost tactile preview of how products will look in real life.

From Static Photos to 3D Product Exploration

- 3D Model Interaction: Customers can spin, zoom, and examine detailed 3D representations of watches, eyewear, footwear, apparel, and more—bridging much of the sensory gap left by 2D photos.

- Augmented Placement: VTO uses browser-based AR or native app frameworks to place virtual products directly into the live camera view, whether on a wrist, on a face, or in a room, without extra hardware.

Immersive AR Overlay Mechanics

- Landmark Detection: Computer-vision models (e.g., MediaPipe or custom neural nets) pinpoint facial or body landmarks in each camera frame.

- Model Alignment & Scaling: These landmarks guide the precise positioning and sizing of the 3D asset, ensuring watches sit perfectly on wrists and glasses align snugly on noses.

- Real-Time Motion Tracking: Landmarks are re-detected on every frame, so the overlay stays locked to the user as they move, turn their head, or shift their body.

- PBR Shading & Lighting: Physically based rendering simulates how real materials—metals, fabrics, gemstones—react to ambient light, producing true-to-life reflections and textures.
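The landmark, alignment, and tracking steps above can be illustrated with a minimal sketch. It assumes two 2D eye landmarks in pixel coordinates (the kind of output a face-landmark model such as MediaPipe can provide) and a hypothetical glasses model whose lens-center spacing is known. The names `align_glasses`, `PoseSmoother`, and `model_eye_distance` are illustrative, and the exponential moving average is a simple stand-in for whatever jitter filtering a production system uses. This is not Tigervue’s implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Screen-space placement of an overlay: center, uniform scale, roll angle."""
    x: float
    y: float
    scale: float
    angle: float  # radians


def align_glasses(left_eye, right_eye, model_eye_distance):
    """Derive a 2D similarity transform (translate, scale, rotate) from two eye landmarks.

    left_eye / right_eye: (x, y) pixel coordinates as they appear in the image.
    model_eye_distance: hypothetical spacing of the lens centers in model units.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return Pose2D(
        x=(left_eye[0] + right_eye[0]) / 2,  # anchor at the midpoint between the eyes
        y=(left_eye[1] + right_eye[1]) / 2,
        scale=math.hypot(dx, dy) / model_eye_distance,  # pixels per model unit
        angle=math.atan2(dy, dx),  # head roll in image space
    )


class PoseSmoother:
    """Exponential moving average that damps frame-to-frame landmark jitter."""

    def __init__(self, alpha=0.5):
        # alpha = 1.0 disables smoothing; smaller values are steadier but lag more.
        self.alpha = alpha
        self.state = None

    def update(self, pose):
        if self.state is None:
            self.state = pose
            return pose
        a, s = self.alpha, self.state
        # Blend angles through sin/cos so the wrap-around at +/- pi is handled.
        angle = math.atan2(
            a * math.sin(pose.angle) + (1 - a) * math.sin(s.angle),
            a * math.cos(pose.angle) + (1 - a) * math.cos(s.angle),
        )
        self.state = Pose2D(
            x=a * pose.x + (1 - a) * s.x,
            y=a * pose.y + (1 - a) * s.y,
            scale=a * pose.scale + (1 - a) * s.scale,
            angle=angle,
        )
        return self.state


# Usage: two consecutive frames of (hypothetical) eye landmarks.
smoother = PoseSmoother(alpha=0.5)
for left, right in [((100, 200), (160, 200)), ((104, 201), (164, 203))]:
    pose = smoother.update(align_glasses(left, right, model_eye_distance=0.064))
    print(pose)
```

A fixed-weight average trades lag for stability; adaptive filters such as the One Euro filter increase responsiveness when motion is fast and smooth more heavily when the user holds still.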

The Tech Stack Behind the Scenes

- WebAR & WebXR Frameworks: Browser AR platforms and WebGL libraries (e.g., 8th Wall for AR tracking, Three.js for 3D rendering) deliver low-latency AR in modern browsers.

- Machine Learning Refinement: AI models learn from each session—improving landmark accuracy, reducing jitter, and adapting to diverse skin tones and lighting conditions over time.

- Optimized 3D Workflows: High-fidelity CAD designs are retopologized and decimated for smooth performance on both mobile and desktop devices.
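Production pipelines usually rely on quadric-error or similar decimators; as a simpler stand-in, vertex clustering shows the core idea of trading geometric detail for a lighter mesh. This minimal sketch snaps vertices to a uniform grid, merges the vertices that share a cell, and drops triangles that collapse. The function name and the `cell_size` tuning parameter are illustrative assumptions, not part of Tigervue’s pipeline.

```python
from collections import defaultdict


def cluster_decimate(vertices, triangles, cell_size):
    """Simplify a triangle mesh by vertex clustering.

    vertices: list of (x, y, z) tuples; triangles: list of (i, j, k) index tuples.
    cell_size: grid spacing (hypothetical tuning knob); larger = coarser mesh.
    Returns (new_vertices, new_triangles).
    """
    # 1. Assign every vertex to a grid cell.
    cell_of = []
    members = defaultdict(list)
    for idx, (x, y, z) in enumerate(vertices):
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        cell_of.append(cell)
        members[cell].append(idx)

    # 2. Replace each occupied cell with the average position of its vertices.
    cell_index = {}
    new_vertices = []
    for cell, idxs in members.items():
        n = len(idxs)
        centroid = tuple(sum(vertices[i][axis] for i in idxs) / n for axis in range(3))
        cell_index[cell] = len(new_vertices)
        new_vertices.append(centroid)

    # 3. Remap triangles, dropping ones whose corners merged (degenerate)
    #    and duplicates that now use the same three vertices (winding ignored).
    new_triangles = []
    seen = set()
    for tri in triangles:
        a, b, c = (cell_index[cell_of[i]] for i in tri)
        if a == b or b == c or a == c:
            continue
        key = frozenset((a, b, c))
        if key in seen:
            continue
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles


verts = [(0, 0, 0), (0.1, 0, 0), (1, 0, 0), (0, 1, 0)]
tris = [(0, 2, 3), (1, 2, 3), (0, 1, 2)]
print(cluster_decimate(verts, tris, cell_size=0.5))
```

Vertex clustering is fast and predictable but can distort silhouettes, which is why error-driven decimation plus baked normal maps is the more common choice for hero products.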

AR Rendering Engine Overview

AR try-on experiences demand rendering engines that balance visual fidelity with a small footprint. Tigervue’s proprietary engine, at just ~2 MB, runs smoothly both on mobile devices and in the browser, delivering real-time, high-quality graphics without sacrificing performance or interactivity.

By leveraging optimized GPU and CPU pipelines, our engine ensures that digital overlays, whether jewelry, watches, or apparel, follow user movements seamlessly and respond to ambient lighting. Unlike bulkier game engines (e.g., Unity’s ~20 MB runtime), Tigervue’s solution maintains equivalent image quality across platforms while supporting complex materials such as gemstones, metals, and fabrics.

This end-to-end control over texture mapping, lighting models, and shading algorithms allows us to fine-tune every aspect of the visual pipeline—resulting in consistently immersive, lifelike virtual try-on experiences that load fast, run fluidly, and captivate users.
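Fine-tuning lighting models and shading often comes down to small, well-known building blocks of physically based rendering. The sketch below shows one of them: the Fresnel term that makes gems and polished metals brighten at grazing angles. It uses Schlick’s approximation and the widely used metallic-roughness convention, in which non-metals reflect about 4% at normal incidence. It is a generic illustration, not Tigervue’s shader code. The diamond IOR of 2.42 and the gold tint are approximate reference values, and the function names are illustrative.

```python
import math


def f0_from_ior(ior, outside_ior=1.0):
    """Normal-incidence reflectance of a dielectric from its index of refraction."""
    return ((ior - outside_ior) / (ior + outside_ior)) ** 2


def schlick_fresnel(f0, cos_theta):
    """Schlick's approximation: F = F0 + (1 - F0) * (1 - cos(theta))^5.

    cos_theta is the cosine between the view direction and the surface normal
    (or half vector), clamped to [0, 1].
    """
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def metallic_f0(base_color, metallic, dielectric_f0=0.04):
    """Metallic-roughness convention: blend a fixed ~4% reflectance toward the metal's tint."""
    return tuple(dielectric_f0 * (1 - metallic) + c * metallic for c in base_color)


diamond_f0 = f0_from_ior(2.42)  # approximate IOR of diamond; about 0.17
gold_f0 = metallic_f0((1.00, 0.71, 0.29), metallic=1.0)  # approximate gold tint
for angle_deg in (0, 60, 85):
    cos_t = math.cos(math.radians(angle_deg))
    gold = [round(schlick_fresnel(c, cos_t), 3) for c in gold_f0]
    print(angle_deg, round(schlick_fresnel(diamond_f0, cos_t), 3), gold)
```

The loop shows the behavior that makes PBR materials read as real: reflectance stays near F0 when viewed head-on and rises toward 1 at grazing angles, for both the diamond and the tinted metal.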

3D Modeling Challenges & Tigervue’s Approach

Creating photorealistic 3D assets for Virtual Try-On involves balancing three critical factors—visual fidelity, performance, and cost. Luxury brands demand exquisite detail and nuanced textures, yet high-resolution models can quickly become too heavy for smooth real-time rendering on standard consumer devices.

- Photorealism vs. Performance: Detailed geometry and PBR textures drive realism but increase file size and draw calls. Tigervue employs mesh decimation, normal-map baking, and adaptive level-of-detail (LOD) techniques to preserve visual quality while keeping models lightweight.

- Scalability & Cost Management: Scaling 3D production across thousands of SKUs can balloon budgets. Our optimized asset pipeline—featuring automated retopology and shader presets—streamlines model creation, reducing manual labor and mitigating cost spikes.

- Browser & Device Constraints: Web browsers impose strict memory and CPU limits, and mobile CPUs/GPUs vary widely in capability. Tigervue’s proprietary rendering engine (just ~2 MB) runs efficiently across platforms, using compressed texture formats to maintain sub-second load times and GPU instancing to keep draw calls low.

- Load Time & User Experience: Slow asset loading directly impacts conversion. Through predictive streaming and asset prioritization, Tigervue ensures that key models and textures load first, delivering a seamless, immersive try-on experience without frustrating delays.
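Adaptive LOD and asset prioritization can both be expressed compactly. In this minimal sketch, an LOD is chosen from the object’s on-screen height, and a priority queue decides the fetch order so that a coarse mesh and key textures arrive before full-detail assets. The pixel thresholds, priorities, function names, and file names are hypothetical placeholders, not Tigervue’s streaming logic.

```python
import heapq


def choose_lod(screen_height_px, thresholds=(400, 150)):
    """Pick a level of detail from the object's on-screen height in pixels.

    LOD 0 is the most detailed mesh; thresholds are hypothetical cut-offs,
    so >= 400 px -> LOD 0, >= 150 px -> LOD 1, otherwise LOD 2.
    """
    for lod, min_px in enumerate(thresholds):
        if screen_height_px >= min_px:
            return lod
    return len(thresholds)


def load_order(requests):
    """Return asset names in fetch order (lowest priority value first).

    requests: list of (name, priority) pairs. The sequence number breaks ties
    so equal-priority assets keep their original order.
    """
    heap = [(priority, seq, name) for seq, (name, priority) in enumerate(requests)]
    heapq.heapify(heap)
    order = []
    while heap:
        _, _, name = heapq.heappop(heap)
        order.append(name)
    return order


requests = [
    ("watch_strap_detail_normal.ktx2", 3),
    ("watch_case_lod2.glb", 0),  # coarse silhouette first
    ("watch_case_basecolor.ktx2", 1),
    ("watch_case_lod0.glb", 2),  # full detail streams in afterwards
]
print(choose_lod(220))  # 1
print(load_order(requests))
# ['watch_case_lod2.glb', 'watch_case_basecolor.ktx2', 'watch_case_lod0.glb', 'watch_strap_detail_normal.ktx2']
```

A heap is used rather than a one-off sort so that newly predicted assets (for example, the next color variant a shopper is likely to tap) can be pushed into the queue while earlier downloads are still in flight.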

Data Strategies: Collection, Diversity & Optimization

Extensive, high-quality datasets are the cornerstone of an accurate Virtual Try-On (VTO) experience. At Tigervue, we build proprietary collections that span a wide range of body types, product categories (apparel, footwear, eyewear, jewelry, homeware), and material textures. These datasets serve as the training ground for our machine-learning models, which analyze body contours, proportions, and movement patterns to ensure virtual items drape, fit, and respond naturally.

We continuously refine our algorithms through iterative feedback loops—capturing new use cases, lighting conditions, and style variations—to enhance realism and minimize edge-case failures. By owning the end-to-end data pipeline, Tigervue maintains full control over data quality and diversity, resulting in consistent performance across demographics and devices.

Building this level of dataset sophistication is a meticulous, multi-phase process that typically spans six months. The payoff, however, is a robust VTO solution that adapts dynamically to each user, delivering an immersive, accurate preview of how products will look and feel in the real world.
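Diversity is easier to manage once it is measured. As a minimal sketch, assume each training sample is tagged with hypothetical attributes such as body type and lighting condition. Counting samples per combination and flagging the combinations that fall below a chosen minimum is one simple way to decide where the next round of collection should focus. The attribute names, values, and threshold are illustrative assumptions, not Tigervue’s schema.

```python
from collections import Counter
from itertools import product


def coverage_gaps(samples, axes, min_count):
    """Find attribute combinations with too few samples.

    samples: list of dicts, e.g. {"body_type": "petite", "lighting": "dim"}.
    axes: dict mapping attribute name -> list of expected values.
    Returns (combination, count) pairs below min_count, fewest samples first.
    """
    names = list(axes)
    counts = Counter(tuple(s.get(n) for n in names) for s in samples)
    gaps = []
    # Enumerate every expected combination, including ones never observed.
    for combo in product(*(axes[n] for n in names)):
        if counts[combo] < min_count:
            gaps.append((combo, counts[combo]))
    return sorted(gaps, key=lambda item: item[1])


axes = {"body_type": ["petite", "tall"], "lighting": ["bright", "dim"]}
samples = (
    [{"body_type": "petite", "lighting": "bright"}] * 5
    + [{"body_type": "tall", "lighting": "dim"}] * 2
)
print(coverage_gaps(samples, axes, min_count=3))
```

Enumerating the full cross product matters: combinations with zero samples never appear in the counter, and they are usually the gaps that cause edge-case failures in the field.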

Rapid Web Deployment: “60 Minutes to Live on Any Site”

Tigervue leads the market in Virtual Try-On (VTO) and 3D experiences with a solution engineered for effortless web integration. Ideal for brands without dedicated apps, our lightweight SDK can be integrated in just 60 minutes, requires no app download for shoppers, and works across all modern browsers. Once live, shareable links let you promote your immersive VTO on Instagram, TikTok, WeChat, email campaigns, and more, delivering true-to-life 3D previews that captivate audiences and expand your digital reach.

Optimizing Neural Networks for Real-Time AR Try-On

Running neural networks on mobile devices is a cornerstone of Tigervue’s AR Try-On, but it presents two major challenges: compute load and rendering performance.

- Frame Compression for Speed: We downscale each 2K–4K camera frame to a 192 px resolution, shrinking each dimension by roughly 10× to 20× (e.g., 3,840 px / 192 px = 20) and the total pixel count by far more, so our tracking models run at real-time speeds on standard smartphone processors.

- Lightweight Model Architecture: To fit within mobile compute limits, we employ streamlined neural network topologies and quantization techniques, delivering high-quality landmark detection and pose estimation without draining battery or causing latency.

- Mobile-Optimized Rendering Engine: Our compact (~2 MB) render pipeline is tuned for high frame rates on diverse devices. By balancing texture compression, LOD switching, and shader complexity, we maintain smooth visuals even on entry-level phones.
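The frame-compression and quantization points above can be checked with a little arithmetic. This minimal sketch assumes the long side of the frame is resized to 192 px with the aspect ratio preserved; a model that expects a square, padded 192×192 input would change the pixel-count figures. It also includes a generic symmetric int8 quantization routine, one common form of the quantization techniques mentioned above. The function names are illustrative, and none of this is Tigervue’s model code.

```python
def downscale_size(width, height, target_long_side=192):
    """Return (w, h) with the longer side resized to target_long_side, aspect ratio kept."""
    scale = target_long_side / max(width, height)
    return round(width * scale), round(height * scale)


def reduction_factors(width, height, target_long_side=192):
    """Return (reduction factor on the long side, reduction factor on total pixel count)."""
    new_w, new_h = downscale_size(width, height, target_long_side)
    linear = max(width, height) / target_long_side
    pixels = (width * height) / (new_w * new_h)
    return linear, pixels


def quantize_int8(values):
    """Symmetric per-tensor int8 quantization: q = round(v / scale), scale = max|v| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate float values."""
    return [x * scale for x in q]


for w, h in [(2048, 1080), (3840, 2160)]:
    print((w, h), "->", downscale_size(w, h), reduction_factors(w, h))

weights = [0.82, -1.3, 0.05, 0.4]
q, s = quantize_int8(weights)
print(q, dequantize_int8(q, s))
```

For a 3840×2160 frame this gives a 192×108 input: a 20× reduction per side and a 400× reduction in pixels. Storing weights as int8 instead of float32 cuts their memory by 4×, at the cost of a rounding error of at most half a quantization step per weight.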

Driving the Future of Virtual Try-On

Virtual Try-On transforms online shopping by forging emotional connections and delivering immersive, lifelike interactions in real time. Powering this experience requires substantial investment in augmented reality, machine learning, and optimized rendering engines, all working behind the scenes to track, render, and align virtual products with user movements. By mastering the “rocket science” of VTO, from data pipelines and neural-network optimization to lightweight, high-fidelity visuals, brands gain the technical capability to delight customers, reduce returns, and earn a competitive edge in today’s dynamic e-commerce landscape.